Issue of the Week: Economic Opportunity

Why Poverty Persists in America, 3.12.23

Just posted is the upcoming story in the Sunday New York Times Magazine on poverty in America.

As we’ve said often, our frequent return to this source is both a terrible commentary on the shrinking of newspapers and print journalism in America and deserved high praise for the consistently fine journalism (including book excerpts, as this piece is) to be found there.

After the War on Poverty of the mid-sixties, which included the expansion of FDR’s social programs and new programs under LBJ, poverty was cut in half. But poverty has since stayed at similarly persistent rates for 50 years, as part of the process, which we have described often, that led to the immense inequality of today.

Official poverty rates don’t begin to tell the full tale of poverty, because those just above the line, or even considerably above it, are still suffering and struggling to varying degrees with all the attendant personal and social woes, such as hunger, disease, lack of health care and unaffordable housing. Poverty always was and always will be there to the extent that the system is set up, in effect, to exploit a certain percentage of people to the advantage of the rest, and mainly of the wealthy. Of course, as we keep saying, this has never been sustainable, much less moral.

This piece in The New York Times Magazine doesn’t cover all the related issues, but it covers many, tells a riveting story, and puts the focus on the ongoing disgrace of poverty in America.

Here’s an excerpt:

Antipoverty programs work. Each year, millions of families are spared the indignities and hardships of severe deprivation because of these government investments. But our current antipoverty programs cannot abolish poverty by themselves. The Johnson administration started the War on Poverty and the Great Society in 1964. These initiatives constituted a bundle of domestic programs that included the Food Stamp Act, which made food aid permanent; the Economic Opportunity Act, which created Job Corps and Head Start; and the Social Security Amendments of 1965, which founded Medicare and Medicaid and expanded Social Security benefits. Nearly 200 pieces of legislation were signed into law in President Lyndon B. Johnson’s first five years in office, a breathtaking level of activity. And the result? Ten years after the first of these programs were rolled out in 1964, the share of Americans living in poverty was half what it was in 1960.

But the War on Poverty and the Great Society were started during a time when organized labor was strong, incomes were climbing, rents were modest and the fringe banking industry as we know it today didn’t exist. Today multiple forms of exploitation have turned antipoverty programs into something like dialysis, a treatment designed to make poverty less lethal, not to make it disappear.

This means we don’t just need deeper antipoverty investments. We need different ones, policies that refuse to partner with poverty, policies that threaten its very survival. We need to ensure that aid directed at poor people stays in their pockets, instead of being captured by companies whose low wages are subsidized by government benefits, or by landlords who raise the rents as their tenants’ wages rise, or by banks and payday-loan outlets who issue exorbitant fines and fees. Unless we confront the many forms of exploitation that poor families face, we risk increasing government spending only to experience another 50 years of sclerosis in the fight against poverty.

“Why Poverty Persists in America”

A Pulitzer Prize-winning sociologist offers a new explanation for an intractable problem.

In the past 50 years, scientists have mapped the entire human genome and eradicated smallpox. Here in the United States, infant-mortality rates and deaths from heart disease have fallen by roughly 70 percent, and the average American has gained almost a decade of life. Climate change was recognized as an existential threat. The internet was invented.

On the problem of poverty, though, there has been no real improvement — just a long stasis. As estimated by the federal government’s poverty line, 12.6 percent of the U.S. population was poor in 1970; two decades later, it was 13.5 percent; in 2010, it was 15.1 percent; and in 2019, it was 10.5 percent. To graph the share of Americans living in poverty over the past half-century amounts to drawing a line that resembles gently rolling hills. The line curves slightly up, then slightly down, then back up again over the years, staying steady through Democratic and Republican administrations, rising in recessions and falling in boom years.

What accounts for this lack of progress? It cannot be chalked up to how the poor are counted: Different measures spit out the same embarrassing result. When the government began reporting the Supplemental Poverty Measure in 2011, designed to overcome many of the flaws of the Official Poverty Measure, including not accounting for regional differences in costs of living and government benefits, the United States officially gained three million more poor people. Possible reductions in poverty from counting aid like food stamps and tax benefits were more than offset by recognizing how low-income people were burdened by rising housing and health care costs.

Any fair assessment of poverty must confront the breathtaking march of material progress. But the fact that standards of living have risen across the board doesn’t mean that poverty itself has fallen. Forty years ago, only the rich could afford cellphones. But cellphones have become more affordable over the past few decades, and now most Americans have one, including many poor people. This has led observers like Ron Haskins and Isabel Sawhill, senior fellows at the Brookings Institution, to assert that “access to certain consumer goods,” like TVs, microwave ovens and cellphones, shows that “the poor are not quite so poor after all.”

No, it doesn’t. You can’t eat a cellphone. A cellphone doesn’t grant you stable housing, affordable medical and dental care or adequate child care. In fact, as things like cellphones have become cheaper, the cost of the most necessary of life’s necessities, like health care and rent, has increased. From 2000 to 2022 in the average American city, the cost of fuel and utilities increased by 115 percent. The American poor, living as they do in the center of global capitalism, have access to cheap, mass-produced goods, as every American does. But that doesn’t mean they can access what matters most. As Michael Harrington put it 60 years ago: “It is much easier in the United States to be decently dressed than it is to be decently housed, fed or doctored.”

Why, then, when it comes to poverty reduction, have we had 50 years of nothing? When I first started looking into this depressing state of affairs, I assumed America’s efforts to reduce poverty had stalled because we stopped trying to solve the problem. I bought into the idea, popular among progressives, that the election of President Ronald Reagan (as well as that of Prime Minister Margaret Thatcher in the United Kingdom) marked the ascendancy of market fundamentalism, or “neoliberalism,” a time when governments cut aid to the poor, lowered taxes and slashed regulations. If American poverty persisted, I thought, it was because we had reduced our spending on the poor. But I was wrong.

Reagan expanded corporate power, deeply cut taxes on the rich and rolled back spending on some antipoverty initiatives, especially in housing. But he was unable to make large-scale, long-term cuts to many of the programs that make up the American welfare state. Throughout Reagan’s eight years as president, antipoverty spending grew, and it continued to grow after he left office. Spending on the nation’s 13 largest means-tested programs — aid reserved for Americans who fall below a certain income level — went from $1,015 a person the year Reagan was elected president to $3,419 a person one year into Donald Trump’s administration, a 237 percent increase.

Most of this increase was due to health care spending, and Medicaid in particular. But even if we exclude Medicaid from the calculation, we find that federal investments in means-tested programs increased by 130 percent from 1980 to 2018, from $630 to $1,448 per person.
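The percentages quoted in the two paragraphs above follow from ordinary percent-change arithmetic. As a minimal sketch, assuming the per-person dollar figures are exactly as stated in the text (the helper name `pct_increase` is ours, introduced only for illustration):

```python
def pct_increase(start: float, end: float) -> float:
    """Return the percent change going from start to end."""
    return (end - start) / start * 100


# Per-person spending figures as quoted in the article.
all_programs = pct_increase(1015, 3419)       # 13 largest means-tested programs
excluding_medicaid = pct_increase(630, 1448)  # same calculation without Medicaid

print(round(all_programs), round(excluding_medicaid))
```

Rounded to whole numbers, the two results match the article's 237 percent and 130 percent.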

“Neoliberalism” is now part of the left’s lexicon, but I looked in vain to find it in the plain print of federal budgets, at least as far as aid to the poor was concerned. There is no evidence that the United States has become stingier over time. The opposite is true.

This makes the country’s stalled progress on poverty even more baffling. Decade after decade, the poverty rate has remained flat even as federal relief has surged.

If we have more than doubled government spending on poverty and achieved so little, one reason is that the American welfare state is a leaky bucket. Take welfare, for example: When it was administered through the Aid to Families With Dependent Children program, almost all of its funds were used to provide single-parent families with cash assistance. But when President Bill Clinton reformed welfare in 1996, replacing the old model with Temporary Assistance for Needy Families (TANF), he transformed the program into a block grant that gives states considerable leeway in deciding how to distribute the money. As a result, states have come up with rather creative ways to spend TANF dollars. Arizona has used welfare money to pay for abstinence-only sex education. Pennsylvania diverted TANF funds to anti-abortion crisis-pregnancy centers. Maine used the money to support a Christian summer camp. Nationwide, for every dollar budgeted for TANF in 2020, poor families directly received just 22 cents.

We’ve approached the poverty question by pointing to poor people themselves, when we should have been focusing on exploitation.

A fair amount of government aid earmarked for the poor never reaches them. But this does not fully solve the puzzle of why poverty has been so stubbornly persistent, because many of the country’s largest social-welfare programs distribute funds directly to people. Roughly 85 percent of the Supplemental Nutrition Assistance Program budget is dedicated to funding food stamps themselves, and almost 93 percent of Medicaid dollars flow directly to beneficiaries.

There are, it would seem, deeper structural forces at play, ones that have to do with the way the American poor are routinely taken advantage of. The primary reason for our stalled progress on poverty reduction has to do with the fact that we have not confronted the unrelenting exploitation of the poor in the labor, housing and financial markets.

As a theory of poverty, “exploitation” elicits a muddled response, causing us to think “of course” and “but, no” in the same instant. The word carries a moral charge, but social scientists have a fairly coolheaded way to measure exploitation: When we are underpaid relative to the value of what we produce, we experience labor exploitation; when we are overcharged relative to the value of something we purchase, we experience consumer exploitation. For example, if a family paid $1,000 a month to rent an apartment with a market value of $20,000, that family would experience a higher level of renter exploitation than a family who paid the same amount for an apartment with a market valuation of $100,000. When we don’t own property or can’t access credit, we become dependent on people who do and can, which in turn invites exploitation, because a bad deal for you is a good deal for me.

Our vulnerability to exploitation grows as our liberty shrinks. Because undocumented workers are not protected by labor laws, more than a third are paid below minimum wage, and nearly 85 percent are not paid overtime. Many of us who are U.S. citizens, or who crossed borders through official checkpoints, would not work for these wages. We don’t have to. If they migrate here as adults, those undocumented workers choose the terms of their arrangement. But just because desperate people accept and even seek out exploitative conditions doesn’t make those conditions any less exploitative. Sometimes exploitation is simply the best bad option.

Consider how many employers now get one over on American workers. The United States offers some of the lowest wages in the industrialized world. A larger share of workers in the United States make “low pay” (earning less than two-thirds of median wages) than in any other country belonging to the Organization for Economic Cooperation and Development. According to the group, nearly 23 percent of American workers labor in low-paying jobs, compared with roughly 17 percent in Britain, 11 percent in Japan and 5 percent in Italy. Poverty wages have swollen the ranks of the American working poor, most of whom are 35 or older.

One popular theory for the loss of good jobs is deindustrialization, which caused the shuttering of factories and the hollowing out of communities that had sprung up around them. Such a passive word, “deindustrialization” — leaving the impression that it just happened somehow, as if the country got deindustrialization the way a forest gets infested by bark beetles. But economic forces framed as inexorable, like deindustrialization and the acceleration of global trade, are often helped along by policy decisions like the 1994 North American Free Trade Agreement, which made it easier for companies to move their factories to Mexico and contributed to the loss of hundreds of thousands of American jobs. The world has changed, but it has changed for other economies as well. Yet Belgium and Canada and many other countries haven’t experienced the kind of wage stagnation and surge in income inequality that the United States has.

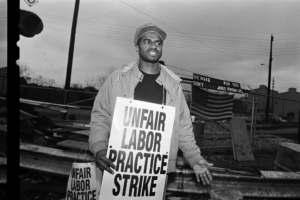

Those countries managed to keep their unions. We didn’t. Throughout the 1950s and 1960s, nearly a third of all U.S. workers carried union cards. These were the days of the United Automobile Workers, led by Walter Reuther, once savagely beaten by Ford’s brass-knuckle boys, and of the mighty American Federation of Labor and Congress of Industrial Organizations that together represented around 15 million workers, more than the population of California at the time.

In their heyday, unions put up a fight. In 1970 alone, 2.4 million union members participated in work stoppages, wildcat strikes and tense standoffs with company heads. The labor movement fought for better pay and safer working conditions and supported antipoverty policies. Their efforts paid off for both unionized and nonunionized workers, as companies like Eastman Kodak were compelled to provide generous compensation and benefits to their workers to prevent them from organizing. By one estimate, the wages of nonunionized men without a college degree would be 8 percent higher today if union strength remained what it was in the late 1970s, a time when worker pay climbed, chief-executive compensation was reined in and the country experienced the most economically equitable period in modern history.

It is important to note that Old Labor was often a white man’s refuge. In the 1930s, many unions outwardly discriminated against Black workers or segregated them into Jim Crow local chapters. In the 1960s, unions like the Brotherhood of Railway and Steamship Clerks and the United Brotherhood of Carpenters and Joiners of America enforced segregation within their ranks. Unions harmed themselves through their self-defeating racism and were further weakened by a changing economy. But organized labor was also attacked by political adversaries. As unions flagged, business interests sensed an opportunity. Corporate lobbyists made deep inroads in both political parties, beginning a public-relations campaign that pressured policymakers to roll back worker protections.

A national litmus test arrived in 1981, when 13,000 unionized air traffic controllers left their posts after contract negotiations with the Federal Aviation Administration broke down. When the workers refused to return, Reagan fired all of them. The public’s response was muted, and corporate America learned that it could crush unions with minimal blowback. And so it went, in one industry after another.

Today almost all private-sector employees (94 percent) are without a union, though roughly half of nonunion workers say they would organize if given the chance. They rarely are. Employers have at their disposal an arsenal of tactics designed to prevent collective bargaining, from hiring union-busting firms to telling employees that they could lose their jobs if they vote yes. Those strategies are legal, but companies also make illegal moves to block unions, like disciplining workers for trying to organize or threatening to close facilities. In 2016 and 2017, the National Labor Relations Board charged 42 percent of employers with violating federal law during union campaigns. In nearly a third of cases, this involved illegally firing workers for organizing.

Corporate lobbyists told us that organized labor was a drag on the economy — that once the companies had cleared out all these fusty, lumbering unions, the economy would rev up, raising everyone’s fortunes. But that didn’t come to pass. The negative effects of unions have been wildly overstated, and there is now evidence that unions play a role in increasing company productivity, for example by reducing turnover. The U.S. Bureau of Labor Statistics measures productivity as how efficiently companies turn inputs (like materials and labor) into outputs (like goods and services). Historically, productivity, wages and profits rise and fall in lock step. But the American economy is less productive today than it was in the post-World War II period, when unions were at peak strength. The economies of other rich countries have slowed as well, including those with more highly unionized work forces, but it is clear that diluting labor power in America did not unleash economic growth or deliver prosperity to more people. “We were promised economic dynamism in exchange for inequality,” Eric Posner and Glen Weyl write in their book “Radical Markets.” “We got the inequality, but dynamism is actually declining.”

As workers lost power, their jobs got worse. For several decades after World War II, ordinary workers’ inflation-adjusted wages (known as “real wages”) increased by 2 percent each year. But since 1979, real wages have grown by only 0.3 percent a year. Astonishingly, workers with a high school diploma made 2.7 percent less in 2017 than they would have in 1979, adjusting for inflation. Workers without a diploma made nearly 10 percent less.

Lousy, underpaid work is not an indispensable, if regrettable, byproduct of capitalism, as some business defenders claim today. (This notion would have scandalized capitalism’s earliest defenders. John Stuart Mill, arch advocate of free people and free markets, once said that if widespread scarcity was a hallmark of capitalism, he would become a communist.) But capitalism is inherently about owners trying to give as little, and workers trying to get as much, as possible. With unions largely out of the picture, corporations have chipped away at the conventional midcentury work arrangement, which involved steady employment, opportunities for advancement and raises and decent pay with some benefits.

As the sociologist Gerald Davis has put it: Our grandparents had careers. Our parents had jobs. We complete tasks. Or at least that has been the story of the American working class and working poor.

Poor Americans aren’t just exploited in the labor market. They face consumer exploitation in the housing and financial markets as well.

There is a long history of slum exploitation in America. Money made slums because slums made money. Rent has more than doubled over the past two decades, rising much faster than renters’ incomes. Median rent rose from $483 in 2000 to $1,216 in 2021. Why have rents shot up so fast? Experts tend to offer the same rote answers to this question. There’s not enough housing supply, they say, and too much demand. Landlords must charge more just to earn a decent rate of return. Must they? How do we know?

We need more housing; no one can deny that. But rents have jumped even in cities with plenty of apartments to go around. At the end of 2021, almost 19 percent of rental units in Birmingham, Ala., sat vacant, as did 12 percent of those in Syracuse, N.Y. Yet rent in those areas increased by roughly 14 percent and 8 percent, respectively, over the previous two years. National data also show that rental revenues have far outpaced property owners’ expenses in recent years, especially for multifamily properties in poor neighborhoods. Rising rents are not simply a reflection of rising operating costs. There’s another dynamic at work, one that has to do with the fact that poor people — and particularly poor Black families — don’t have much choice when it comes to where they can live. Because of that, landlords can overcharge them, and they do.

A study I published with Nathan Wilmers found that after accounting for all costs, landlords operating in poor neighborhoods typically take in profits that are double those of landlords operating in affluent communities. If down-market landlords make more, it’s because their regular expenses (especially their mortgages and property-tax bills) are considerably lower than those in upscale neighborhoods. But in many cities with average or below-average housing costs — think Buffalo, not Boston — rents in the poorest neighborhoods are not drastically lower than rents in the middle-class sections of town. From 2015 to 2019, median monthly rent for a two-bedroom apartment in the Indianapolis metropolitan area was $991; it was $816 in neighborhoods with poverty rates above 40 percent, just around 17 percent less. Rents are lower in extremely poor neighborhoods, but not by as much as you would think.

Yet where else can poor families live? They are shut out of homeownership because banks are disinclined to issue small-dollar mortgages, and they are also shut out of public housing, which now has waiting lists that stretch on for years and even decades. Struggling families looking for a safe, affordable place to live in America usually have but one choice: to rent from private landlords and fork over at least half their income to rent and utilities. If millions of poor renters accept this state of affairs, it’s not because they can’t afford better alternatives; it’s because they often aren’t offered any.

You can read injunctions against usury in the Vedic texts of ancient India, in the sutras of Buddhism and in the Torah. Aristotle and Aquinas both rebuked it. Dante sent moneylenders to the seventh circle of hell. None of these efforts did much to stem the practice, but they do reveal that the unprincipled act of trapping the poor in a cycle of debt has existed at least as long as the written word. It might be the oldest form of exploitation after slavery. Many writers have depicted America’s poor as unseen, shadowed and forgotten people: as “other” or “invisible.” But markets have never failed to notice the poor, and this has been particularly true of the market for money itself.

The deregulation of the banking system in the 1980s heightened competition among banks. Many responded by raising fees and requiring customers to carry minimum balances. In 1977, over a third of banks offered accounts with no service charge. By the early 1990s, only 5 percent did. Big banks grew bigger as community banks shuttered, and in 2021, the largest banks in America charged customers almost $11 billion in overdraft fees. Just 9 percent of account holders paid 84 percent of these fees. Who were the unlucky 9 percent? Customers who carried an average balance of less than $350. The poor were made to pay for their poverty.

In 2021, the average fee for overdrawing your account was $33.58. Because banks often issue multiple charges a day, it’s not uncommon to overdraw your account by $20 and end up paying $200 for it. Banks could (and do) deny accounts to people who have a history of overextending their money, but those customers also provide a steady revenue stream for some of the most powerful financial institutions in the world.

According to the F.D.I.C., one in 19 U.S. households had no bank account in 2019, amounting to more than seven million families. Compared with white families, Black and Hispanic families were nearly five times as likely to lack a bank account. Where there is exclusion, there is exploitation. Unbanked Americans have created a market, and thousands of check-cashing outlets now serve that market. Check-cashing stores generally charge from 1 to 10 percent of the total, depending on the type of check. That means that a worker who is paid $10 an hour and takes a $1,000 check to a check-cashing outlet will pay $10 to $100 just to receive the money he has earned, effectively losing one to 10 hours of work. (For many, this is preferable to the less-predictable exploitation by traditional banks, with their automatic overdraft fees. It’s the devil you know.) In 2020, Americans spent $1.6 billion just to cash checks. If the poor had a costless way to access their own money, over a billion dollars would have remained in their pockets during the pandemic-induced recession.
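The check-cashing example above can be worked through directly. This is a rough sketch using only the figures in the paragraph (a $1,000 check, fees of 1 to 10 percent, a $10 hourly wage); the function name `check_cashing_cost` is a hypothetical helper, not anything from the article.

```python
def check_cashing_cost(check_amount: float, fee_rate: float, hourly_wage: float):
    """Return (fee in dollars, hours of work the fee represents)."""
    fee = check_amount * fee_rate
    hours_lost = fee / hourly_wage
    return fee, hours_lost


# Low and high ends of the fee range quoted in the article.
low_fee, low_hours = check_cashing_cost(1000, 0.01, 10)
high_fee, high_hours = check_cashing_cost(1000, 0.10, 10)

print(f"Fee: ${low_fee:.0f} to ${high_fee:.0f}")
print(f"Work lost: {low_hours:.0f} to {high_hours:.0f} hours")
```

The range works out to $10 to $100 in fees, or one to 10 hours of work, as the paragraph states.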

Poverty can mean missed payments, which can ruin your credit. But just as troublesome as bad credit is having no credit score at all, which is the case for 26 million adults in the United States. Another 19 million possess a credit history too thin or outdated to be scored. Having no credit (or bad credit) can prevent you from securing an apartment, buying insurance and even landing a job, as employers are increasingly relying on credit checks during the hiring process. And when the inevitable happens — when you lose hours at work or when the car refuses to start — the payday-loan industry steps in.

For most of American history, regulators prohibited lending institutions from charging exorbitant interest on loans. Because of these limits, banks kept interest rates between 6 and 12 percent and didn’t do much business with the poor, who in a pinch took their valuables to the pawnbroker or the loan shark. But the deregulation of the banking sector in the 1980s ushered the money changers back into the temple by removing strict usury limits. Interest rates soon reached 300 percent, then 500 percent, then 700 percent. Suddenly, some people were very interested in starting businesses that lent to the poor. In recent years, 17 states have brought back strong usury limits, capping interest rates and effectively prohibiting payday lending. But the trade thrives in most places. The annual percentage rate for a two-week $300 loan can reach 460 percent in California, 516 percent in Wisconsin and 664 percent in Texas.

Roughly a third of all payday loans are now issued online, and almost half of borrowers who have taken out online loans have had lenders overdraw their bank accounts. The average borrower stays indebted for five months, paying $520 in fees to borrow $375. Keeping people indebted is, of course, the ideal outcome for the payday lender. It’s how they turn a $15 profit into a $150 one. Payday lenders do not charge high fees because lending to the poor is risky — even after multiple extensions, most borrowers pay up. Lenders extort because they can.

Every year: almost $11 billion in overdraft fees, $1.6 billion in check-cashing fees and up to $8.2 billion in payday-loan fees. That’s more than $55 million in fees collected predominantly from low-income Americans each day — not even counting the annual revenue collected by pawnshops and title loan services and rent-to-own schemes. When James Baldwin remarked in 1961 how “extremely expensive it is to be poor,” he couldn’t have imagined these receipts.
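The daily figure can be checked from the three annual totals. This is a simple sketch that treats "almost $11 billion" and "up to $8.2 billion" as the stated upper values and assumes a 365-day year; the variable names are ours.

```python
# Annual fee totals as quoted in the article, in dollars.
overdraft_fees = 11.0e9     # "almost $11 billion", taken at the stated value
check_cashing_fees = 1.6e9
payday_loan_fees = 8.2e9    # "up to $8.2 billion", taken at the upper bound

total_per_year = overdraft_fees + check_cashing_fees + payday_loan_fees
per_day = total_per_year / 365  # assumes a 365-day year

print(f"Total per year: ${total_per_year / 1e9:.1f} billion")
print(f"Per day: ${per_day / 1e6:.1f} million")
```

Under these assumptions the total is about $20.8 billion a year, or roughly $57 million a day, consistent with the article's "more than $55 million."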

“Predatory inclusion” is what the historian Keeanga-Yamahtta Taylor calls it in her book “Race for Profit,” describing the longstanding American tradition of incorporating marginalized people into housing and financial schemes through bad deals when they are denied good ones. The exclusion of poor people from traditional banking and credit systems has forced them to find alternative ways to cash checks and secure loans, which has led to a normalization of their exploitation. This is all perfectly legal, after all, and subsidized by the nation’s richest commercial banks. The fringe banking sector would not exist without lines of credit extended by the conventional one. Wells Fargo and JPMorgan Chase bankroll payday lenders like Advance America and Cash America. Everybody gets a cut.

Poverty isn’t simply the condition of not having enough money. It’s the condition of not having enough choice and being taken advantage of because of that. When we ignore the role that exploitation plays in trapping people in poverty, we end up designing policy that is weak at best and ineffective at worst. For example, when legislation lifts incomes at the bottom without addressing the housing crisis, those gains are often realized instead by landlords, not wholly by the families the legislation was intended to help. A 2019 study conducted by the Federal Reserve Bank of Philadelphia found that when states raised minimum wages, families initially found it easier to pay rent. But landlords quickly responded to the wage bumps by increasing rents, which diluted the effect of the policy. This happened after the pandemic rescue packages, too: When wages began to rise in 2021 after worker shortages, rents rose as well, and soon people found themselves back where they started or worse.

Antipoverty programs work. Each year, millions of families are spared the indignities and hardships of severe deprivation because of these government investments. But our current antipoverty programs cannot abolish poverty by themselves. The Johnson administration started the War on Poverty and the Great Society in 1964. These initiatives constituted a bundle of domestic programs that included the Food Stamp Act, which made food aid permanent; the Economic Opportunity Act, which created Job Corps and Head Start; and the Social Security Amendments of 1965, which founded Medicare and Medicaid and expanded Social Security benefits. Nearly 200 pieces of legislation were signed into law in President Lyndon B. Johnson’s first five years in office, a breathtaking level of activity. And the result? Ten years after the first of these programs were rolled out in 1964, the share of Americans living in poverty was half what it was in 1960.

But the War on Poverty and the Great Society were started during a time when organized labor was strong, incomes were climbing, rents were modest and the fringe banking industry as we know it today didn’t exist. Today multiple forms of exploitation have turned antipoverty programs into something like dialysis, a treatment designed to make poverty less lethal, not to make it disappear.

This means we don’t just need deeper antipoverty investments. We need different ones, policies that refuse to partner with poverty, policies that threaten its very survival. We need to ensure that aid directed at poor people stays in their pockets, instead of being captured by companies whose low wages are subsidized by government benefits, or by landlords who raise the rents as their tenants’ wages rise, or by banks and payday-loan outlets who issue exorbitant fines and fees. Unless we confront the many forms of exploitation that poor families face, we risk increasing government spending only to experience another 50 years of sclerosis in the fight against poverty.

The best way to address labor exploitation is to empower workers. A renewed contract with American workers should make organizing easy. As things currently stand, unionizing a workplace is incredibly difficult. Under current labor law, workers who want to organize must do so one Amazon warehouse or one Starbucks location at a time. We have little chance of empowering the nation’s warehouse workers and baristas this way. This is why many new labor movements are trying to organize entire sectors. The Fight for $15 campaign, led by the Service Employees International Union, doesn’t focus on a single franchise (a specific McDonald’s store) or even a single company (McDonald’s) but brings together workers from several fast-food chains. It’s a new kind of labor power, and one that could be expanded: If enough workers in a specific economic sector — retail, hotel services, nursing — voted for the measure, the secretary of labor could establish a bargaining panel made up of representatives elected by the workers. The panel could negotiate with companies to secure the best terms for workers across the industry. This is a way to organize all Amazon warehouses and all Starbucks locations in a single go.

Sectoral bargaining, as it’s called, would affect tens of millions of Americans who have never benefited from a union of their own, just as it has improved the lives of workers in Europe and Latin America. The idea has been criticized by members of the business community, like the U.S. Chamber of Commerce, which has raised concerns about the inflexibility and even the constitutionality of sectoral bargaining, as well as by labor advocates, who fear that industrywide policies could nullify gains that existing unions have made or could be achieved only if workers make other sacrifices. Proponents of the idea counter that sectoral bargaining could even the playing field, not only between workers and bosses, but also between companies in the same sector that would no longer be locked into a race to the bottom, with an incentive to shortchange their work force to gain a competitive edge. Instead, the companies would be forced to compete over the quality of the goods and services they offer. Maybe we would finally reap the benefits of all that economic productivity we were promised.

We must also expand the housing options for low-income families. There isn’t a single right way to do this, but there is clearly a wrong way: the way we’re doing it now. One straightforward approach is to strengthen our commitment to the housing programs we already have. Public housing provides affordable homes to millions of Americans, but it’s drastically underfunded relative to the need. When the wealthy township of Cherry Hill, N.J., opened applications for 29 affordable apartments in 2021, 9,309 people applied. The sky-high demand should tell us something, though: that affordable housing is a life changer, and families are desperate for it.

We could also pave the way for more Americans to become homeowners, an initiative that could benefit poor, working-class and middle-class families alike — as well as scores of young people. Banks generally avoid issuing small-dollar mortgages, not because they’re riskier — these mortgages have the same delinquency rates as larger mortgages — but because they’re less profitable. Over the life of a mortgage, interest on $1 million brings in a lot more money than interest on $75,000. This is where the federal government could step in, providing extra financing to build on-ramps to first-time homeownership. In fact, it already does so in rural America through the 502 Direct Loan Program, which has moved more than two million families into their own homes. These loans, fully guaranteed and serviced by the Department of Agriculture, come with low interest rates and, for very poor families, cover the entire cost of the mortgage, nullifying the need for a down payment. Last year, the average 502 Direct Loan was for $222,300 but cost the government only $10,370 per loan, chump change for such a durable intervention. Expanding a program like this into urban communities would provide even more low- and moderate-income families with homes of their own.

We should also ensure fair access to capital. Banks should stop robbing the poor and near-poor of billions of dollars each year, immediately ending exorbitant overdraft fees. As the legal scholar Mehrsa Baradaran has pointed out, when someone overdraws an account, banks could simply freeze the transaction or could clear a check with insufficient funds, providing customers a kind of short-term loan with a low interest rate of, say, 1 percent a day.

States should rein in payday-lending institutions and insist that lenders make it clear to potential borrowers what a loan is ultimately likely to cost them. Just as fast-food restaurants must now publish calorie counts next to their burgers and shakes, payday-loan stores should publish the average overall cost of different loans. When Texas adopted disclosure rules, residents took out considerably fewer bad loans. If Texas can do this, why not California or Wisconsin? Yet to stop financial exploitation, we need to expand, not limit, low-income Americans’ access to credit. Some have suggested that the government get involved by having the U.S. Postal Service or the Federal Reserve issue small-dollar loans. Others have argued that we should revise government regulations to entice commercial banks to pitch in. Whatever our approach, solutions should offer low-income Americans more choice, a way to end their reliance on predatory lending institutions that can get away with robbery because they are the only option available.

In Tommy Orange’s novel, “There There,” a man trying to describe the problem of suicides on Native American reservations says: “Kids are jumping out the windows of burning buildings, falling to their deaths. And we think the problem is that they’re jumping.” The poverty debate has suffered from a similar kind of myopia. For the past half-century, we’ve approached the poverty question by pointing to poor people themselves — posing questions about their work ethic, say, or their welfare benefits — when we should have been focusing on the fire. The question that should serve as a looping incantation, the one we should ask every time we drive past a tent encampment, those tarped American slums smelling of asphalt and bodies, or every time we see someone asleep on the bus, slumped over in work clothes, is simply: Who benefits? Not: Why don’t you find a better job? Or: Why don’t you move? Or: Why don’t you stop taking out payday loans? But: Who is feeding off this?

Those who have amassed the most power and capital bear the most responsibility for America’s vast poverty: political elites who have utterly failed low-income Americans over the past half-century; corporate bosses who have spent and schemed to prioritize profits over families; lobbyists blocking the will of the American people with their self-serving interests; property owners who have exiled the poor from entire cities and fueled the affordable-housing crisis. Acknowledging this is both crucial and deliciously absolving; it directs our attention upward and distracts us from all the ways (many unintentional) that we — we the secure, the insured, the housed, the college-educated, the protected, the lucky — also contribute to the problem.

Corporations benefit from worker exploitation, sure, but so do consumers, who buy the cheap goods and services the working poor produce, and so do those of us directly or indirectly invested in the stock market. Landlords are not the only ones who benefit from housing exploitation; many homeowners do, too, their property values propped up by the collective effort to make housing scarce and expensive. The banking and payday-lending industries profit from the financial exploitation of the poor, but so do those of us with free checking accounts, as those accounts are subsidized by billions of dollars in overdraft fees.

Living our daily lives in ways that express solidarity with the poor could mean we pay more; anti-exploitative investing could dampen our stock portfolios. By acknowledging those costs, we acknowledge our complicity. Unwinding ourselves from our neighbors’ deprivation and refusing to live as enemies of the poor will require us to pay a price. It’s the price of our restored humanity and renewed country.

Matthew Desmond is a professor of sociology at Princeton University and a contributing writer for the magazine. His latest book, “Poverty, by America,” from which this article is adapted, is being published on March 21 by Crown.